The DevSecOps movement is all about shifting left: empowering development teams to make more hygienic decisions. In days gone by, given the pace at which engineering teams ship changes and features, security could be treated as an afterthought in the SDLC. Today, modern teams and organizations try to disseminate application security expertise throughout the development pipeline.

The StackHawk Platform aims to bring Dynamic Application Security Testing (DAST) to your CI/CD pipeline. DAST tools typically run against a running application to identify the security vulnerabilities present in it. With the increase in containerization of applications, having an isolated running instance of your application is easy to achieve. Combined with your containerized applications, StackHawk and Harness are a prudent choice in your DevSecOps journey. In this example, we will go through scanning an application before deploying the application to Kubernetes. All of the code snippets are also available on GitHub Gist.

Getting Started With DevSecOps

The goal for this example is to introspect a running container/application with a DAST tool and have the results influence the deployment. A great example application, if you don’t have one handy, is StackHawk’s purpose-built vulnerable Node application.

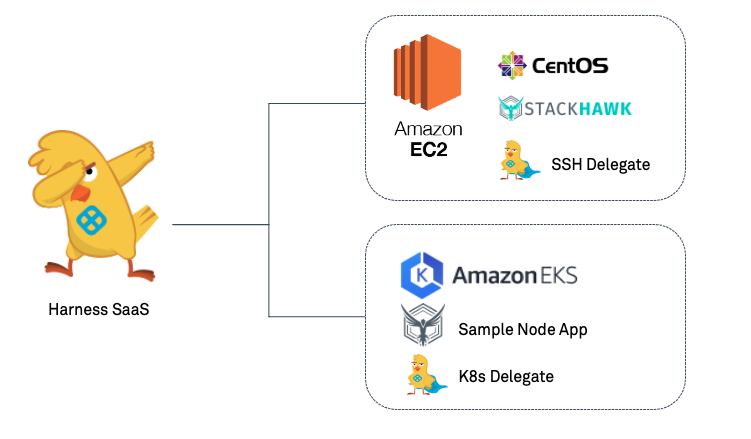

When leveraging the Harness Platform to deploy to Kubernetes, the easiest approach is to deploy a Harness Delegate into the Kubernetes cluster. There are several approaches to invoke a StackHawk scan against a running container. In this example, we will leverage a static EC2 instance to build the sample Docker Image and invoke the StackHawk scan. A Harness SSH Delegate will make interactions on the EC2 instance simple.

Platform Prerequisites

If this is your first time, make sure to sign up for accounts on StackHawk and Harness.

StackHawk Setup

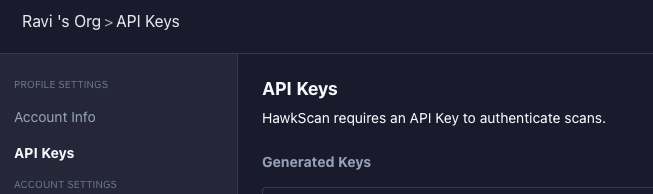

Once signed into your StackHawk account, make sure to copy down the API Key(s) you will be using to authenticate with.

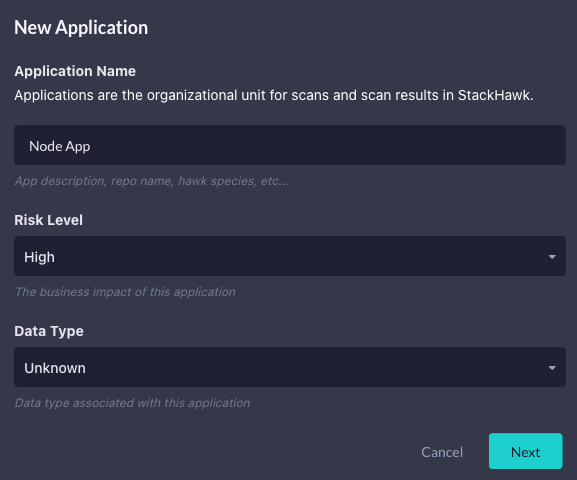

StackHawk works off a concept of Applications. For this example, click on Add an App and create an Application named “Node App.”

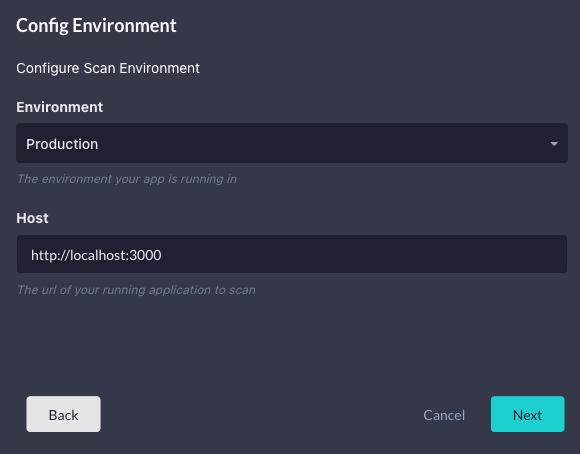

Once you click Next, you can configure the environment. The sample application binds to port 3000, so set the host to “http://localhost:3000.” If the application ends up running at a different address, the host can be changed later by modifying or creating the configuration file [stackhawk.yml] after the application is created.

Once you click Next, you will be presented with an Application ID. Make sure to save that ID; also, you can save the generated stackhawk.yml, which will contain the ID.
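For reference, the stackhawk.yml used in this walkthrough needs only three fields under app. Below is a minimal sketch of writing that file from a shell script. The helper name write_stackhawk_config and the values in the example call are illustrative assumptions, not part of StackHawk's tooling.

```shell
# Hypothetical helper: write a minimal stackhawk.yml into the current directory.
# Arguments: <applicationId> <environment> <host URL>
write_stackhawk_config() {
  cat > stackhawk.yml <<EOF
app:
  applicationId: $1
  env: $2
  host: $3
EOF
}

# Example call with placeholder values (replace with your own Application ID):
# write_stackhawk_config "your_stackhawk_app_id" "Production" "http://localhost:3000"
```

Keeping the three values as arguments makes it easy to swap the host later without editing the file by hand.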

You are now ready for the Harness Delegate installations.

Harness Setup

For this example, you will be installing an SSH and Kubernetes Harness Delegate.

EC2/SSH

Setup -> Harness Delegates -> Install Delegate

Download Type: Shell Script

Name: ec2

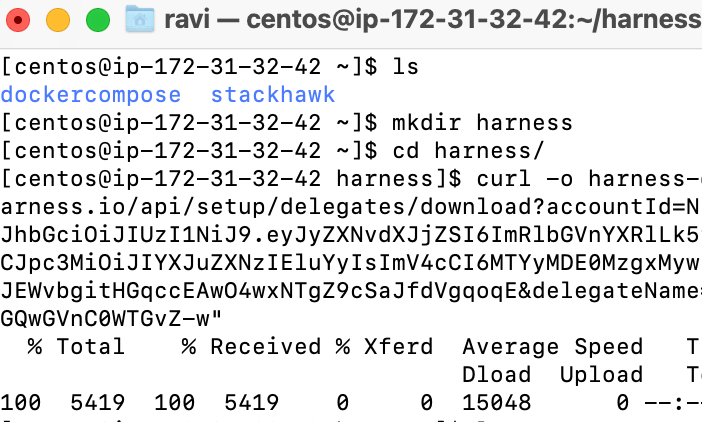

Click on the Copy Download Link. Then, log into a terminal session to the EC2 instance.

Create a directory called “harness” and cd into that directory.

mkdir harness

cd harness

Then, paste the copied download link to download into the “harness” folder.

Once downloaded, you can install the SSH Delegate.

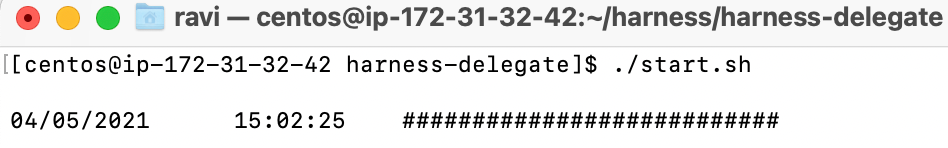

#Install SSH Delegate

tar xfvz harness*.tar.gz

cd harness-delegate

./start.sh

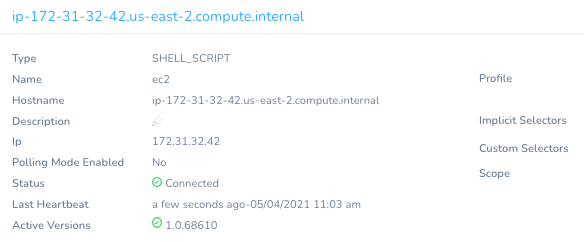

In a few moments, the SSH Delegate will appear in the Harness UI.

The next step will be to install the Harness Kubernetes Delegate.

Kubernetes

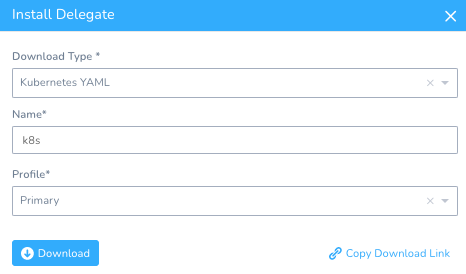

Setup -> Harness Delegates -> Install Delegate

Download Type: Kubernetes YAML

Name: k8s

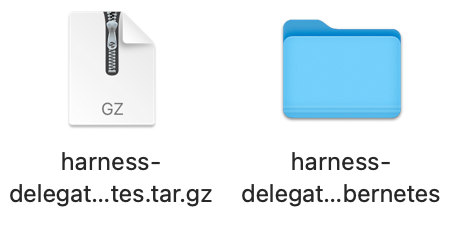

Download and expand the tar.gz to your local machine.

The kubectl commands to apply the manifest are inside the README.txt.

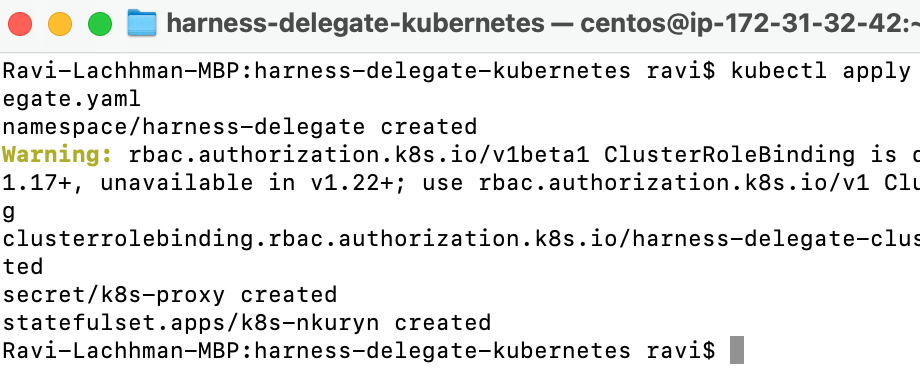

Run “kubectl apply -f harness-delegate.yaml”

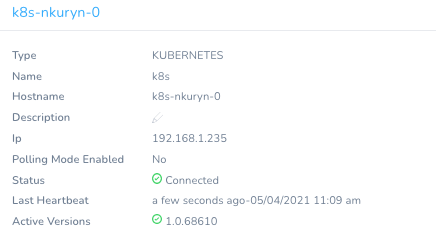

In a few moments, the Kubernetes Delegate will be ready.
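If you prefer to confirm readiness from a terminal rather than the Harness UI, one option is to check the pod status with kubectl. The sketch below is a hypothetical helper that reads the output of kubectl get pods and succeeds only when every pod reports Running. The namespace in the usage comment is an assumption; check the downloaded harness-delegate.yaml for the namespace your Delegate actually uses.

```shell
# Hypothetical helper: reads `kubectl get pods --no-headers` output on stdin
# and succeeds only if there is at least one pod and every pod is Running.
# Column 3 of that output is the pod STATUS.
all_pods_running() {
  awk 'BEGIN { n = 0; bad = 0 }
       NF >= 3 { n++; if ($3 != "Running") bad++ }
       END { exit (n > 0 && bad == 0) ? 0 : 1 }'
}

# Example usage (the namespace name is an assumption; confirm it in
# harness-delegate.yaml):
# kubectl get pods --namespace harness-delegate --no-headers | all_pods_running \
#   && echo "Delegate pods are running"
```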

Now you are ready to assemble the pipeline.

Your First DevSecOps Pipeline

Harness works on the concept of an Abstraction Model, which assembles all of the pieces needed for a pipeline.

Wire Kubernetes Cluster to Harness

Wiring the Kubernetes cluster to Harness is very easy, especially with a deployed Harness Delegate.

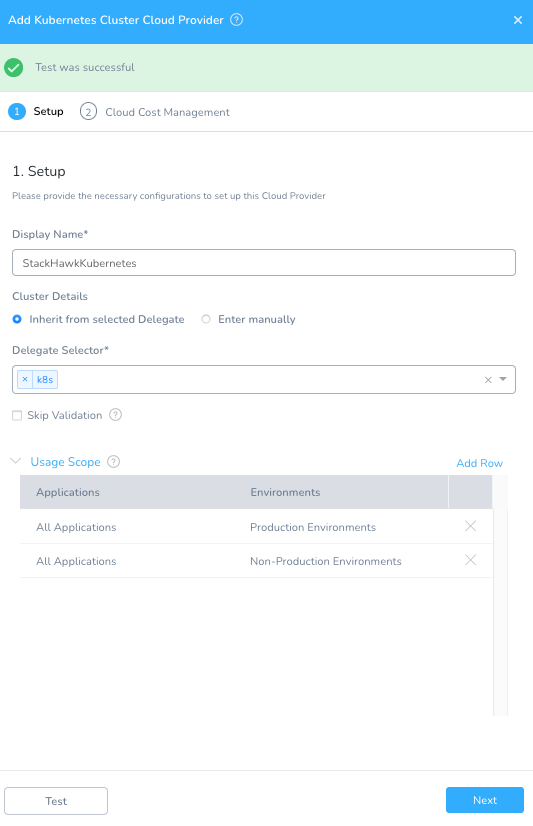

Setup -> Cloud Providers -> +Add Cloud Provider

Type: Kubernetes Cluster

Display Name: StackHawkKubernetes

Cluster Details: Inherit from selected Delegate

Delegate Selector: k8s

Click Test to validate and click Next. Depending on your account, you will be prompted if you want to analyze the cluster workloads for cost savings. You can opt in or out. Once added, Harness can now interact with your cluster.

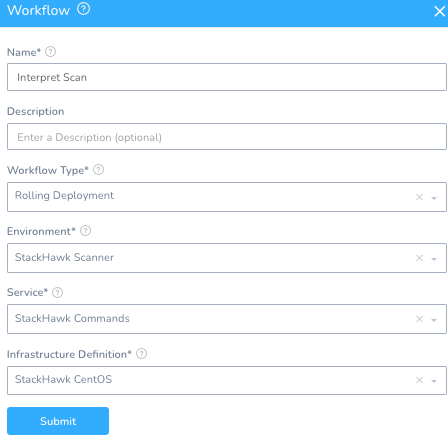

Creating a Harness Application

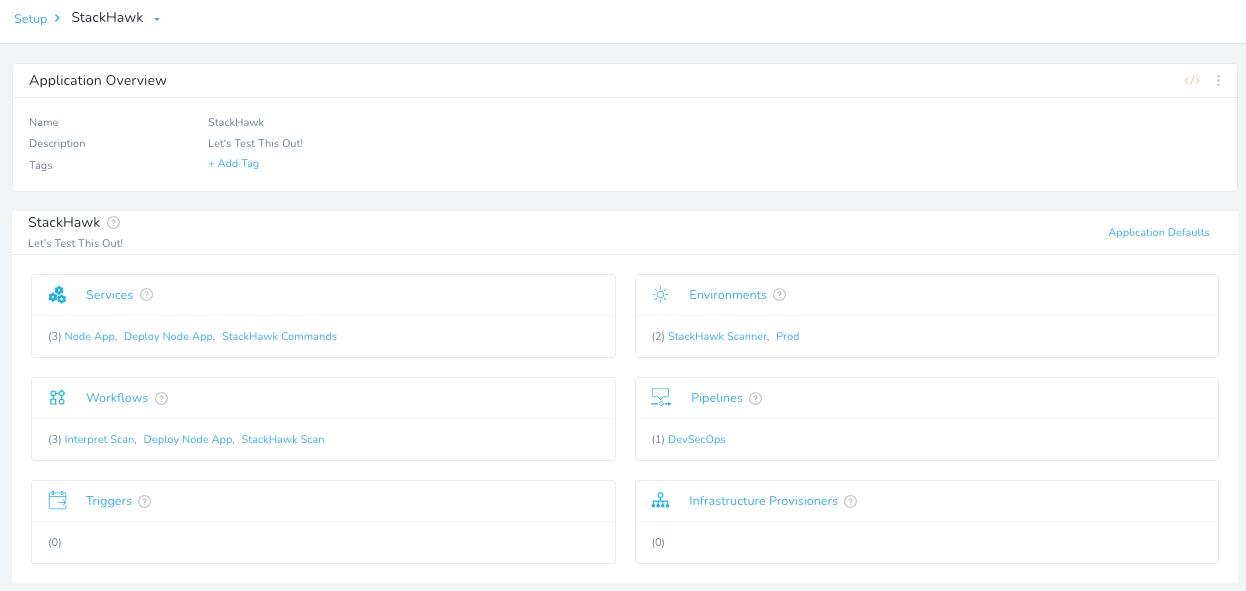

The lifeblood of Harness is a Harness Application.

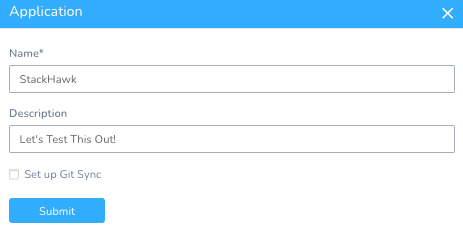

Setup -> Add Application

Name: StackHawk

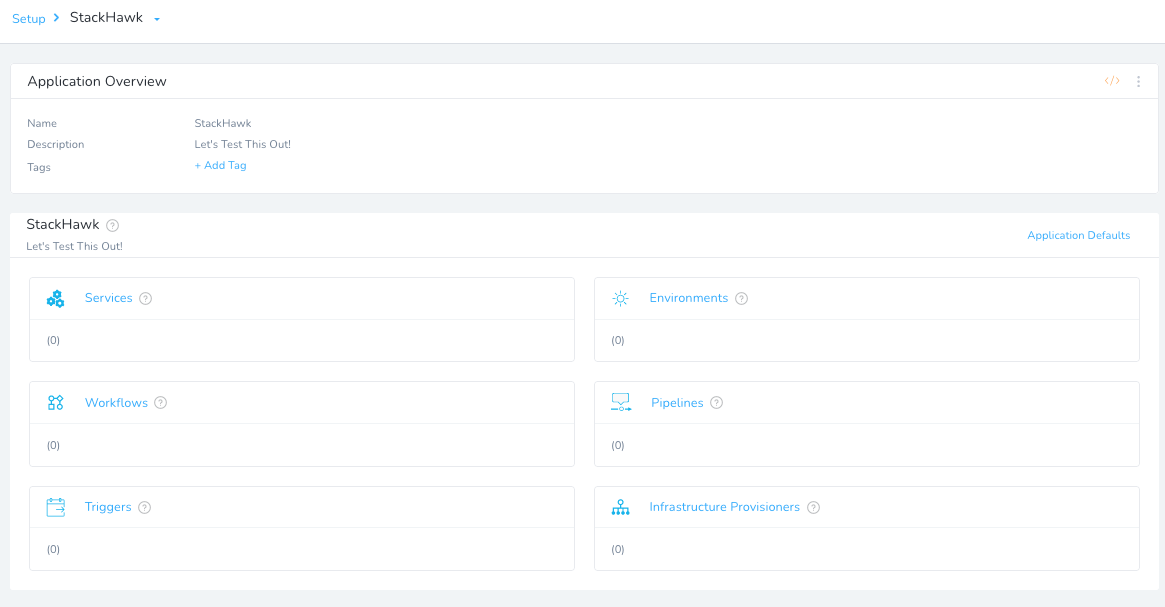

Once you click Submit, you will be greeted with a blank Continuous Delivery Abstraction Model.

Creating a Harness Environment

A Harness Environment is the “where am I going to deploy to?” Environments can span multiple pieces of infrastructure. In this example, we will point to the Kubernetes cluster. Inside your StackHawk Harness Application, add an Environment.

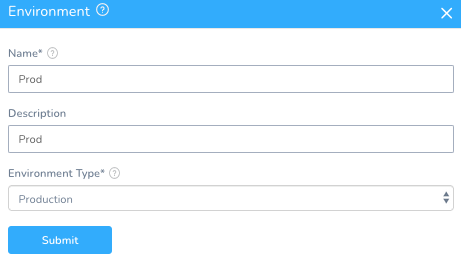

Setup -> StackHawk -> Environments + Add Environment

Name: Prod

Description: Prod

Environment Type: Production.

Once you hit Submit, you can add the Kubernetes Cluster as an Infrastructure Definition.

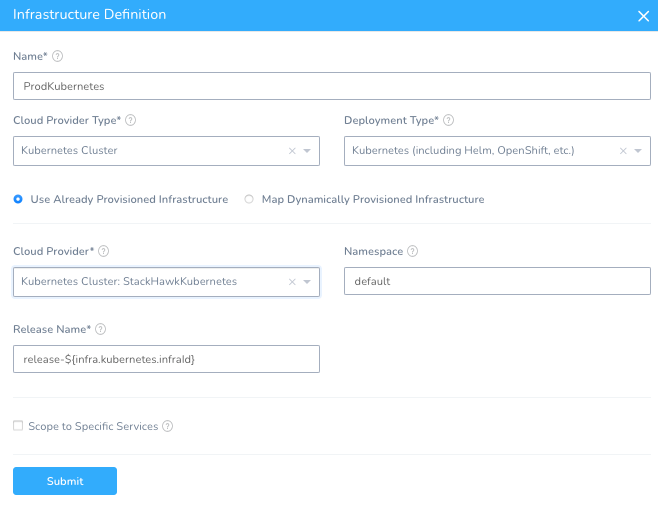

Setup -> StackHawk -> Environments -> Prod + Add Infrastructure Definition

Name: ProdKubernetes

Cloud Provider Type: Kubernetes Cluster

Deployment Type: Kubernetes

Cloud Provider: StackHawkKubernetes

Once you hit Submit, you can now deploy to your Kubernetes Cluster. If leveraging the sample application from GitHub, you will need to build that application or bring your own image.

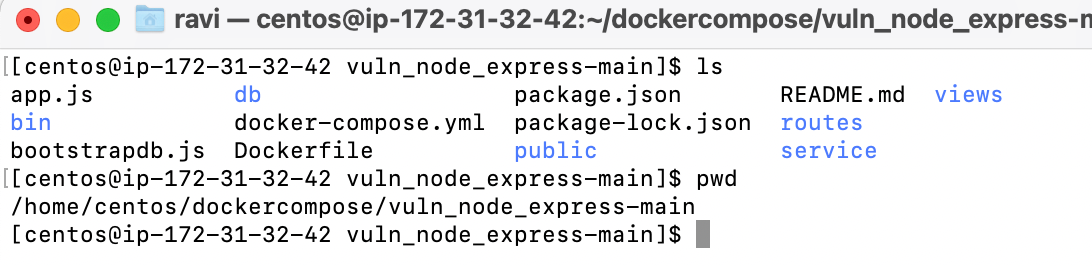

Building the StackHawk Sample Application

There are instructions to build the sample application in StackHawk’s GitHub Project. If you have not played too much with Docker, an easy path would be to fork their project into your personal GitHub Account, then download the contents of that project onto the EC2 instance and build from there. It is also possible to leverage a CI Platform such as Drone or Harness Continuous Integration to build.

Clone and Modify Docker Compose

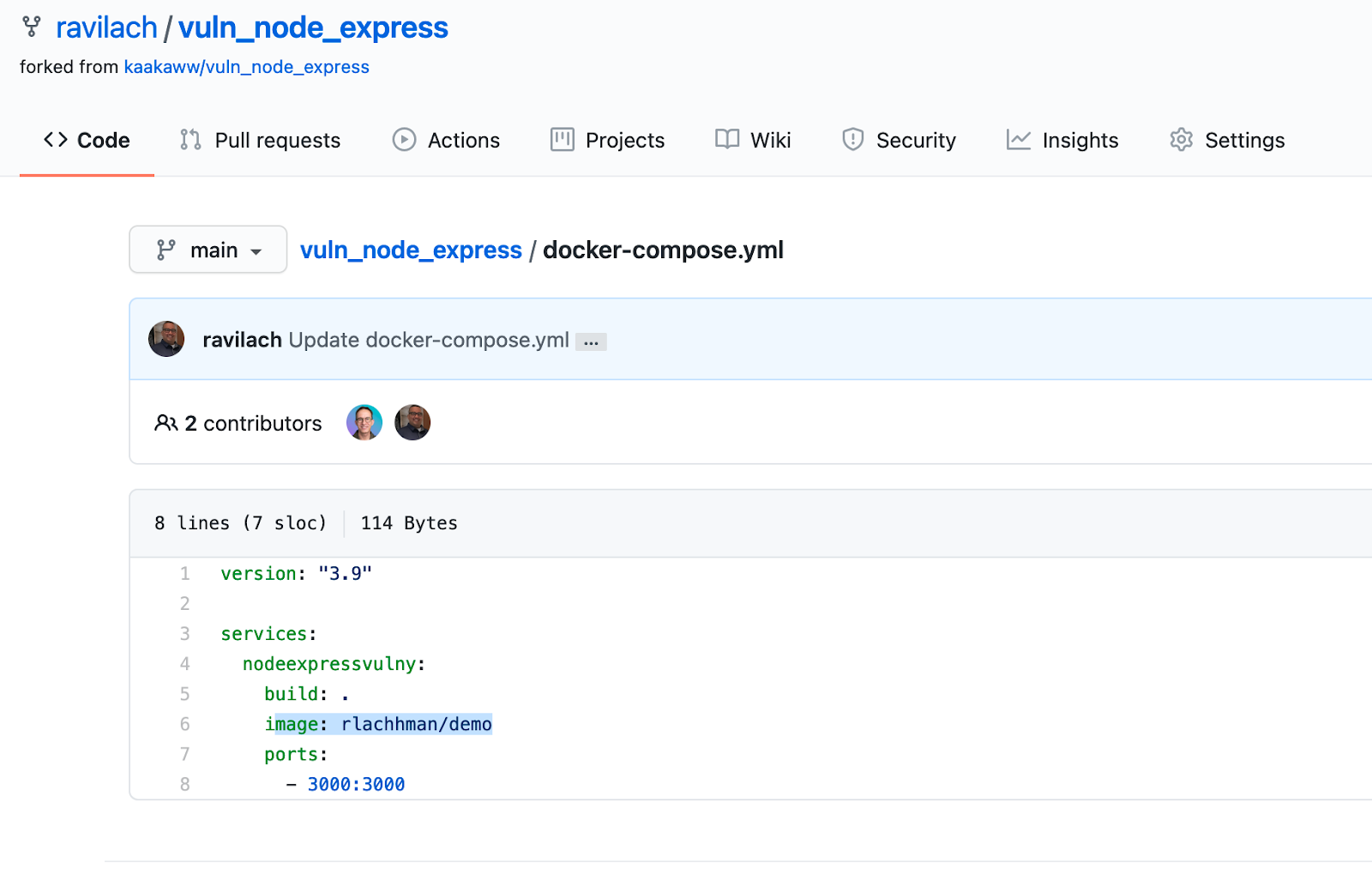

You can fork StackHawk’s project into your own account. For more control over the image name, modify the Docker Compose [docker-compose.yml] with your own image name. For this example, I am publishing to my own Docker Registry [rlachhman/demos:stackHawk], which you can also Docker Pull.

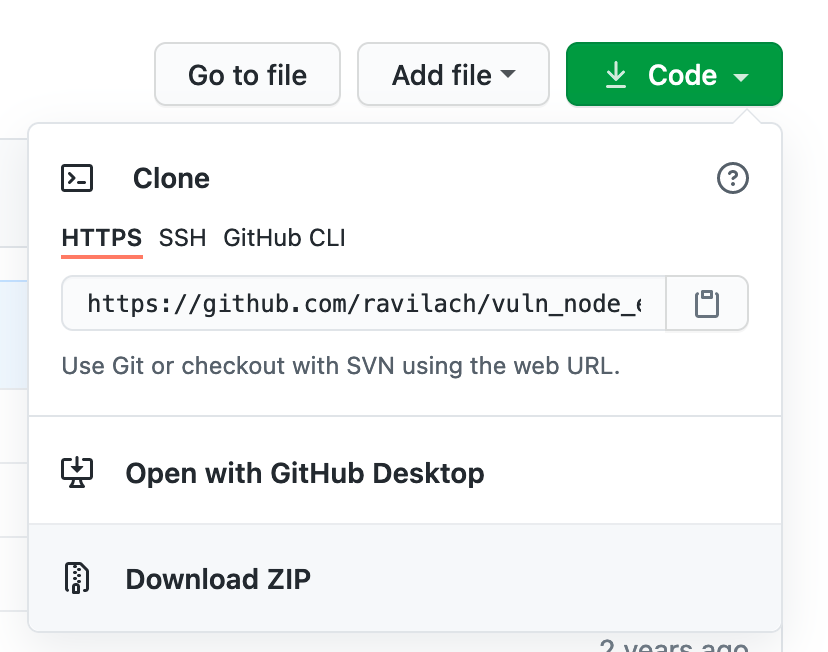

Once you have done that, you can wget the zip of the files to your EC2 instance or leverage a CI Platform. To find out the address of the zip, you can click Code and Download ZIP in GitHub.

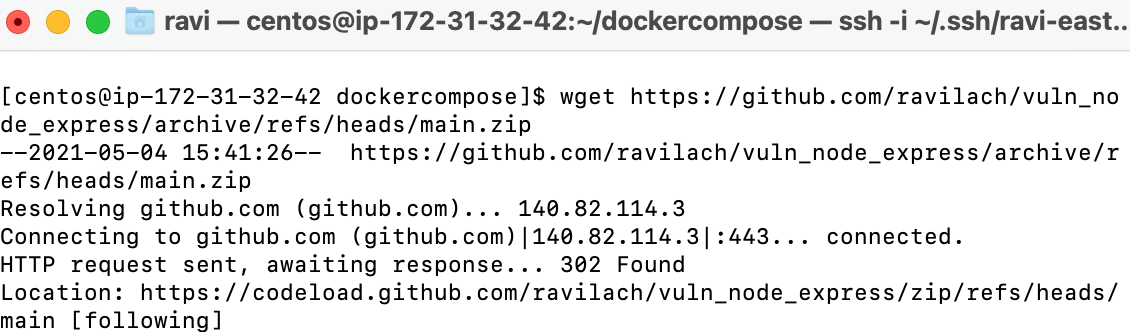

#Get Files

sudo yum install unzip

wget https://github.com/ravilach/vuln_node_express/archive/refs/heads/main.zip

unzip main.zip

Build With Docker Compose or Docker Pull Ready Image

You can either build from source with Docker Compose or leverage an already built image.

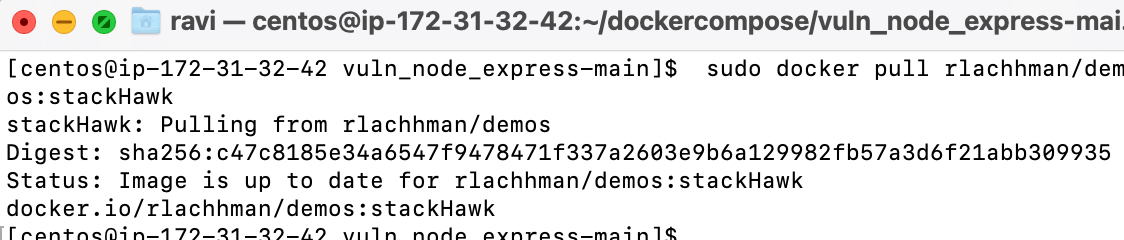

Docker Pull

If you want to skip the Docker Compose step, you can Docker Pull a ready-made image.

#Docker Pull

sudo docker pull rlachhman/demos:stackHawk

You can also use Docker Compose to build the image and modify the name.

Docker Compose

If this is your first time leveraging Docker Compose, you will need to install Docker Compose on the EC2 machine. Below are the instructions for a CentOS EC2 instance, assuming the Docker runtime is up. Potentially, you can add this to a Delegate Profile so it installs with the Harness SSH Delegate.

If you don’t have Docker CE running on your CentOS machine:

#Install Docker

#https://docs.docker.com/engine/install/centos/

sudo yum install -y yum-utils

sudo yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

sudo yum install docker-ce docker-ce-cli containerd.io

sudo systemctl start docker

The Docker Compose Install:

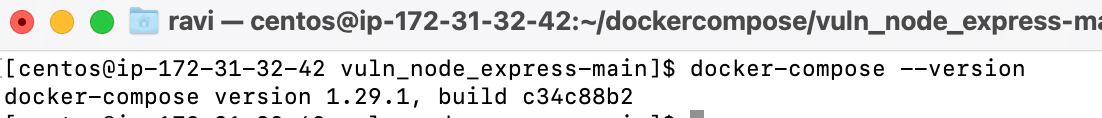

#Docker Compose Install

sudo curl -L "https://github.com/docker/compose/releases/download/1.29.1/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose

sudo chmod +x /usr/local/bin/docker-compose

sudo ln -s /usr/local/bin/docker-compose /usr/bin/docker-compose

docker-compose --version

Once that is complete, change back to the root directory of the downloaded GitHub project. You are now ready to run Docker Compose.
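Before kicking off the build, it can help to confirm that the tools installed so far are actually on the PATH. The following is a small sketch of such a check. The helper name require_commands is hypothetical, and the list of commands in the usage comment simply mirrors the tools used in this walkthrough.

```shell
# Hypothetical helper: report any missing commands and return non-zero
# if at least one is absent from PATH.
require_commands() {
  missing=0
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "Missing required command: $cmd" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Example usage on the EC2 instance:
# require_commands docker docker-compose unzip wget || exit 1
```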

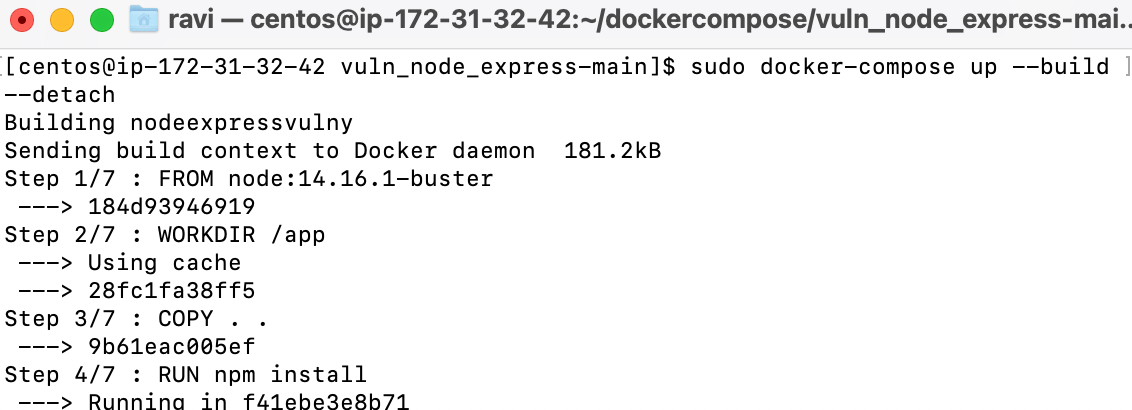

Run Docker Compose Up.

sudo docker-compose up --build --detach

You will need to publish the artifact to a Docker Registry. The easiest way would be to publish to a personal Docker Hub account: simply run a docker login with your Docker Hub credentials and then a docker push to publish.
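As a rough sketch, the tag and push steps could be wrapped in a small function. The helper name publish_image, the DOCKER override variable, and every image name in the usage comment are placeholders for illustration; substitute the image name your Docker Compose build produced and your own Docker Hub repository.

```shell
# Docker command to use; override (e.g. DOCKER="sudo docker") if needed.
DOCKER="${DOCKER:-docker}"

# Hypothetical helper: tag a locally built image and push it to a registry.
# Arguments: <local image> <target repository> <tag>
publish_image() {
  $DOCKER tag "$1" "$2:$3" && $DOCKER push "$2:$3"
}

# Example usage after `docker login` (all names are placeholders):
# publish_image your_local_image your_dockerhub_user/demos stackHawk
```

Setting DOCKER=echo gives a dry run that prints the commands instead of executing them.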

With the Docker Compose steps out of the way, it’s now time to start building the pipeline.

Calling StackHawk from Harness

There are several ways to integrate software into your Harness Workflow. For this example, we would like to run Shell Scripts on our EC2 instance to call StackHawk and then parse the results. The easiest way is to define the EC2 instance as an endpoint [Cloud Provider] so we can manage these shell scripts in a Harness Workflow.

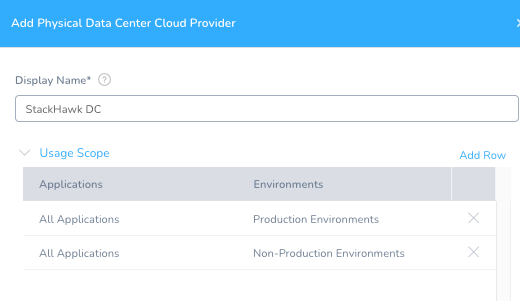

Setup -> Cloud Providers -> + Add Cloud Provider. The type is Physical Data Center. You can name the Provider “StackHawk DC.”

Once you hit Submit, the StackHawk DC will appear.

Back in the StackHawk Application, you can add a Harness Environment mapped to the new Cloud Provider.

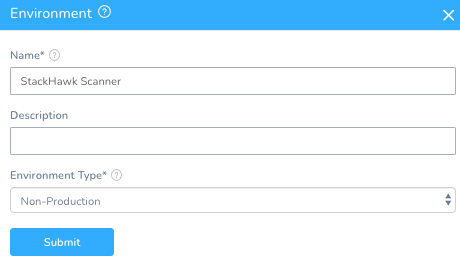

Setup -> StackHawk -> Environments + Add Environment

Name: StackHawk Scanner

Environment Type: Non-Production

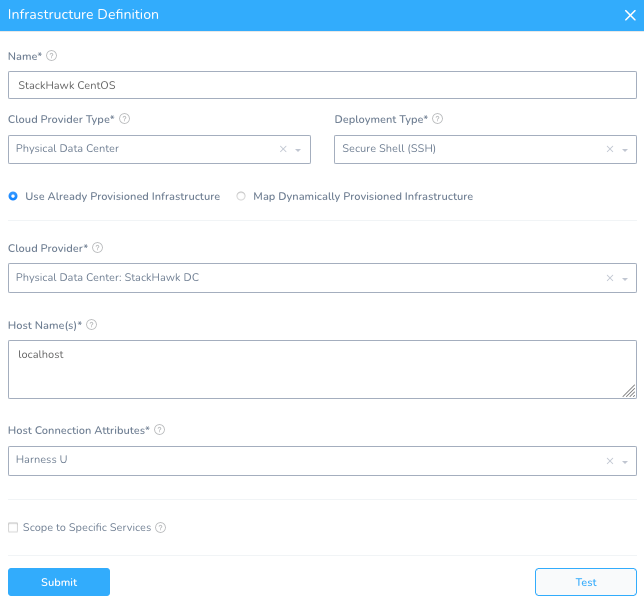

Like the previous Kubernetes Harness Environment, you will need to create an Infrastructure Definition.

Name: StackHawk CentOS

Cloud Provider Type: Physical Data Center

Deployment Type: SSH

Cloud Provider: StackHawk DC

Host Name: localhost

Connection Attribute: Any

Hit Submit – you are now wired to the EC2 instance.

Harness has the ability to orchestrate multiple types of deployments from Shell, Serverless, Kubernetes, and beyond. You can leverage a Harness Service as the conduit to execute the StackHawk commands across the pipeline.

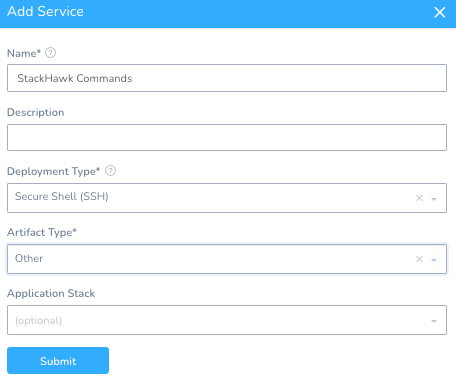

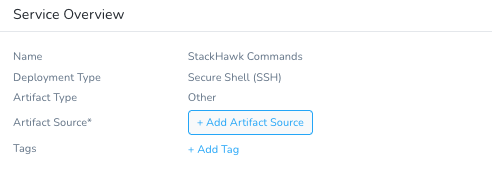

Create a new Harness Service for the StackHawk Commands.

Setup -> StackHawk -> Services + Add Service

Name: StackHawk Commands

Deployment Type: Secure Shell (SSH)

Artifact Type: Other

Click Submit – now you can use this Service to deploy against.

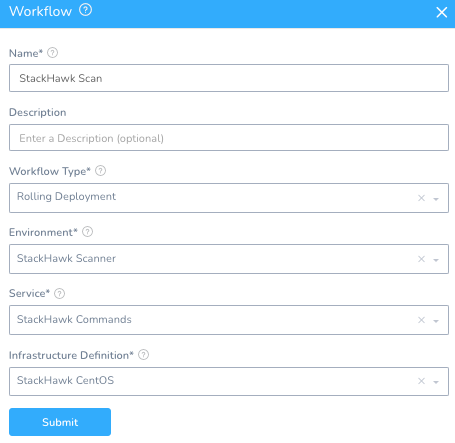

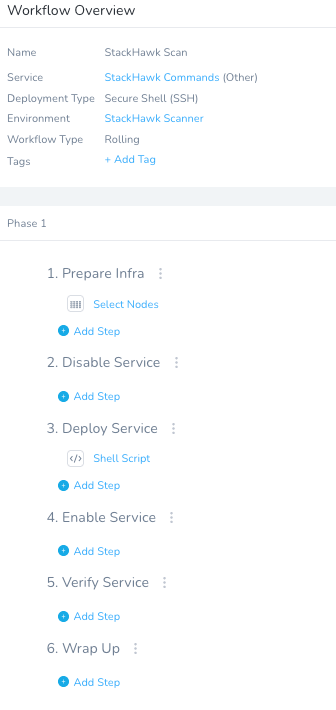

To have Harness manage the commands needed to execute a StackHawk scan, create a Harness Workflow representing that.

Setup -> StackHawk -> Workflows + Add Workflow

Name: StackHawk Scan

Workflow Type: Rolling Deployment

Environment: StackHawk Scanner

Service: StackHawk Commands

Infrastructure Definition: StackHawk CentOS

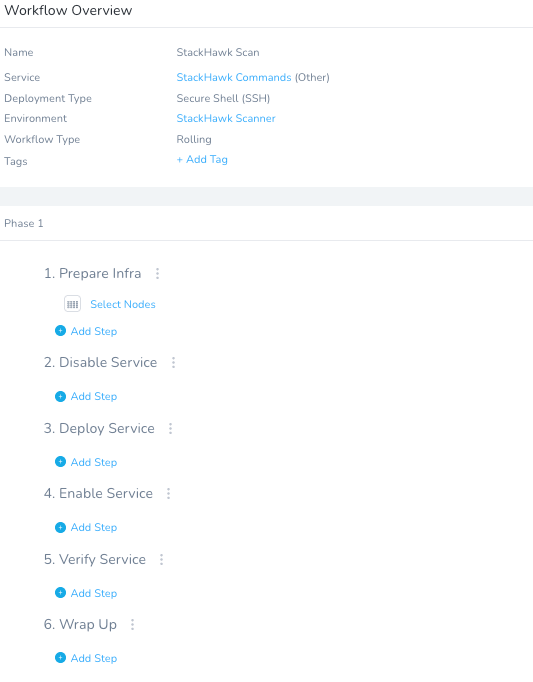

Once you hit Submit, a templated Workflow will be generated. The Phases, names, and order can be changed, condensed, etc.

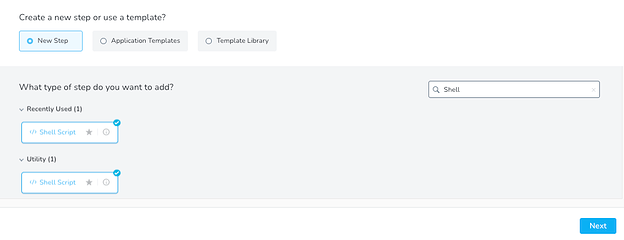

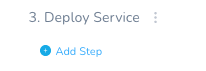

You can slot in the StackHawk Call under Step 3 “Deploy Service” by clicking + Add Step. In the Add Step UI, search for “Shell” and select Shell Script.

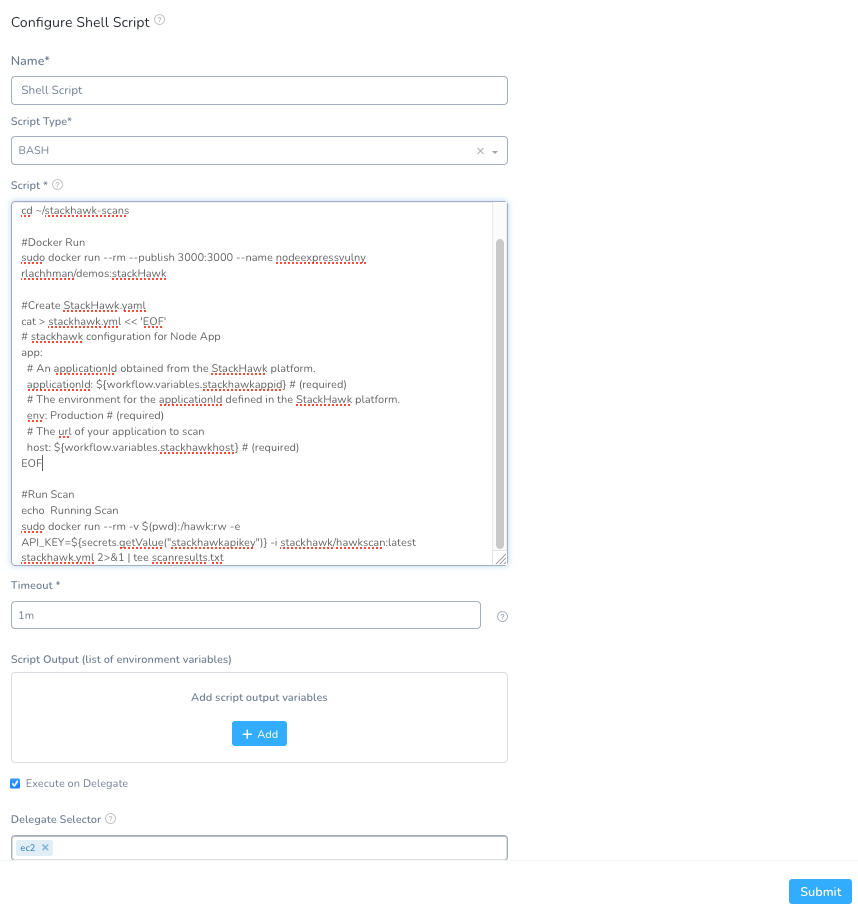

Click Next. Fill out a shell script with what a StackHawk scan requires. Make sure to select the Delegate Selector (ec2) that was assigned to the Shell (i.e. the CentOS) Delegate. The script generates the stackhawk.yml, starts a container of the vulnerable application from the Docker Image, and runs the StackHawk scanner against it. This script takes in variables from the Harness Secret Manager and the Workflow itself, which will be configured after this step.

Depending on what you named your Docker Image from the Docker Compose, you will need to modify the Docker Run command to reflect the new image name and tag.

Name: Shell Script

Type: Bash

Execute on Delegate: checked

Delegate Selector: ec2

Script:

#Make DIR and CD

mkdir -p ~/stackhawk-scans

cd ~/stackhawk-scans

#Docker Run if Needed (detached so the script can continue to the scan)

sudo docker run --rm --detach --publish 3000:3000 --name nodeexpressvulny rlachhman/demos:stackHawk

#Create stackhawk.yml

cat > stackhawk.yml << 'EOF'

# stackhawk configuration for Node App

app:

  # An applicationId obtained from the StackHawk platform.

  applicationId: ${workflow.variables.stackhawkappid} # (required)

  # The environment for the applicationId defined in the StackHawk platform.

  env: Production # (required)

  # The url of your application to scan

  host: ${workflow.variables.stackhawkhost} # (required)

EOF

#Run Scan

sudo docker run --rm -v $(pwd):/hawk:rw -e API_KEY=${secrets.getValue("stackhawkapikey")} -i stackhawk/hawkscan:latest stackhawk.yml 2>&1 | tee scanresults.txt

Click Submit – now the Workflow has the commands to execute a scan.

The above script will take in inputs for the StackHawk API Key, AppID, the running address of your application, and then export the scan results into a local file.
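When the container is started in the same script as the scan, the application may not be listening yet when the scanner begins. One way to guard against that is a small retry loop placed before the scan step. The sketch below uses a hypothetical helper named retry_until; the curl probe in the usage comment assumes the application answers successfully on its root path, which you should verify for your own app.

```shell
# Hypothetical helper: run a command until it succeeds.
# Arguments: <max attempts> <seconds between attempts> <command...>
retry_until() {
  attempts=$1
  delay=$2
  shift 2
  i=1
  while [ "$i" -le "$attempts" ]; do
    if "$@"; then
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  return 1
}

# Example usage before the scan step (URL matches the sample app's port):
# retry_until 30 2 curl --silent --fail --output /dev/null http://localhost:3000 \
#   || { echo "Application did not become ready"; exit 1; }
```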

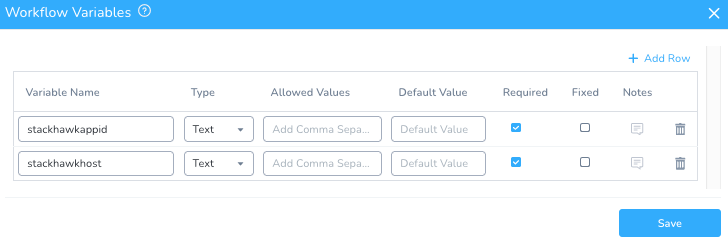

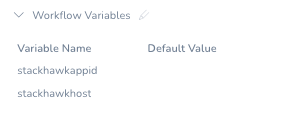

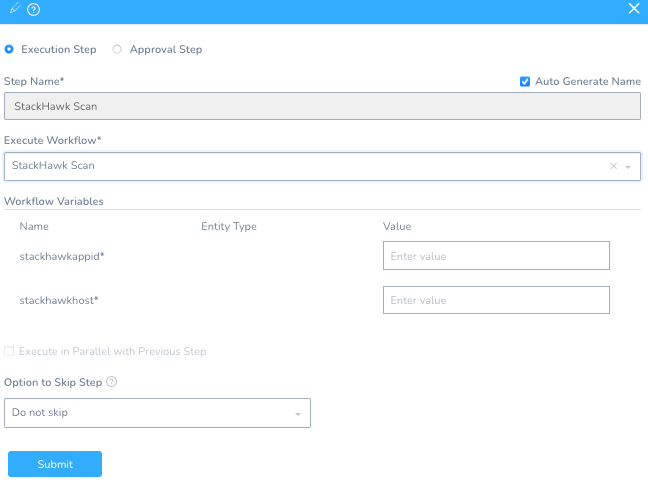

A benefit of wrapping this in a Harness Workflow is that you can prompt users for items. You can set up two variables in the workflow to prompt for the StackHawk AppID and the address of the running image.

In the StackHawk Scan Workflow on the right, click on Workflow Variables and add the following.

Variable Name: stackhawkappid

Type: Text

Required: checked

Variable Name: stackhawkhost

Type: Text

Required: checked

Click Save and the Workflow Variables are in place.

If you would like to add additional variables, they can be accessed in the following format:

${workflow.variables.variableName}

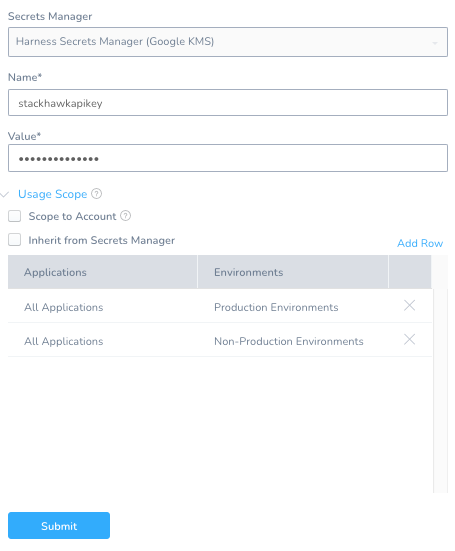

Next, use Harness’ Secret Manager to store your StackHawk API Key.

Security -> Secrets Management + Add Encrypted Text

Name: stackhawkapikey

Value: your_api_key

Click Submit. You are now able to access the Secret. Accessing secrets is similar to accessing a workflow variable.

${secrets.getValue("key_name")}

With the basic scan out of the way, now it is time to create some deployment logic.

Deployment Pipeline

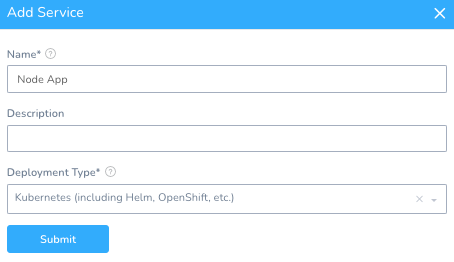

Eventually, you will want to deploy the application. The first step back in the Harness Platform is to create a Harness Service for the Kubernetes deployment.

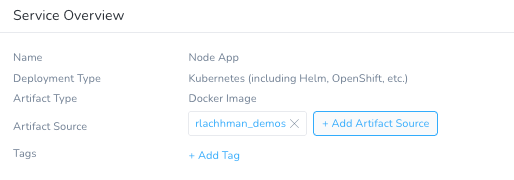

Setup -> Services + Add Service

Name: Node App

Deployment Type: Kubernetes

Once you click Submit, Harness will create the Kubernetes scaffolding needed for a deployment.

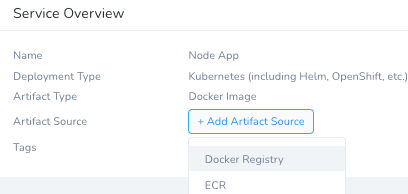

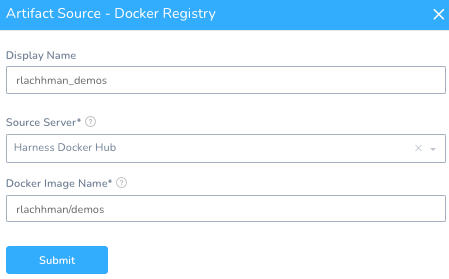

Harness needs to know which artifact to deploy. Click + Add Artifact Source in the Service and select Docker Registry.

If you ran Docker Compose from scratch, you will need to replace the Image Name with yours. If using the pre-baked one, leverage the following.

Source Server: Harness Docker Hub [this is enabled to public Docker Hub by default].

Docker Image Name: rlachhman/demos [or your repo/name].

Once you hit Submit, your Service is ready to be deployed.

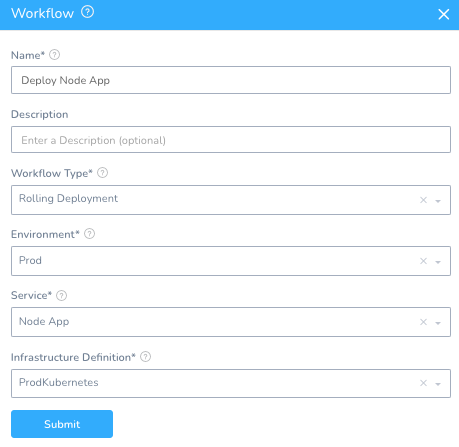

You will need to add a Harness Workflow defining the steps to deploy, which is fairly simple for Kubernetes.

Setup -> StackHawk -> Workflows + Add Workflow

Name: Deploy Node App

Workflow Type: Rolling Deployment

Environment: Prod

Service: Node App

Infrastructure Definition: ProdKubernetes

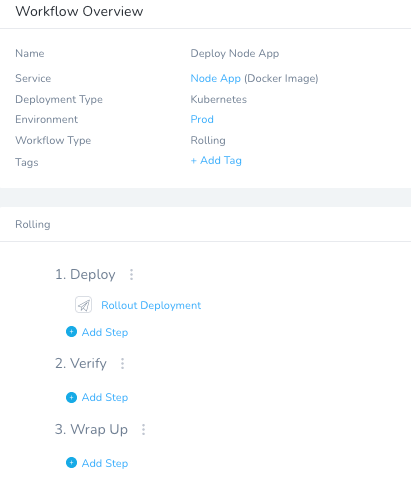

Once you click Submit, your Node App has a Workflow to deploy.

The last remaining steps are to interpret the results and stitch the Workflows together in a cohesive DevSecOps Pipeline.

Automate Your DevSecOps Pipeline

Automation is key to DevSecOps. Since StackHawk scans are executed programmatically in the pipeline, they can also be interpreted programmatically.

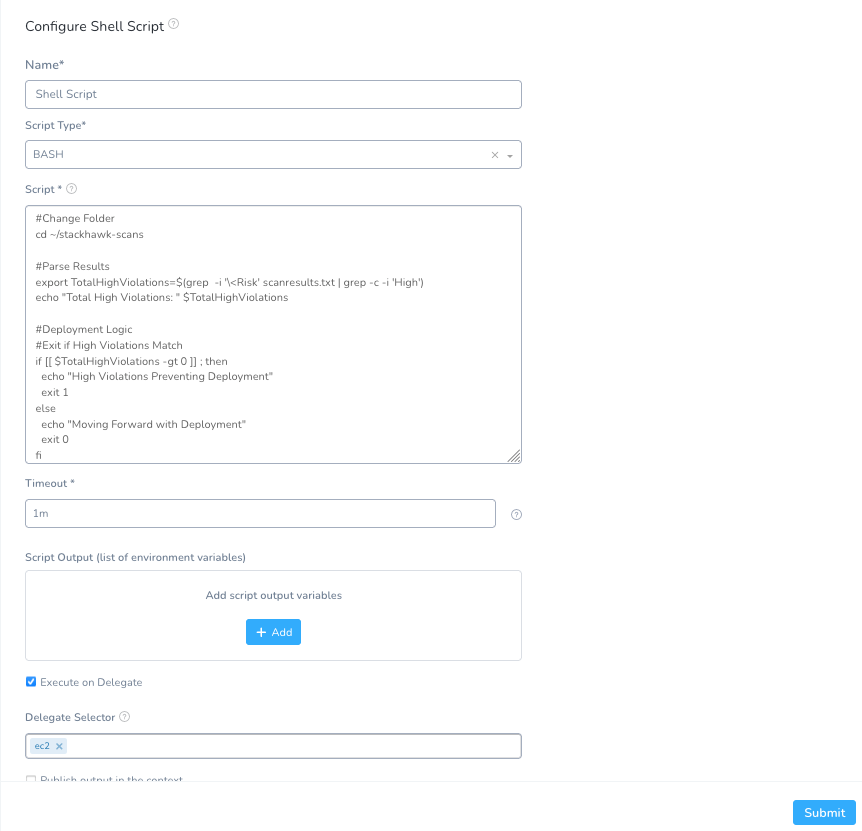

Similar to executing the scan in a Shell Script, you can create another Harness Workflow to interpret the scan results.

Setup -> StackHawk -> Workflows + Add Workflow

Name: Interpret Scan

Workflow Type: Rolling Deployment

Environment: StackHawk Scanner

Service: StackHawk Commands

Infrastructure Definition: StackHawk CentOS

Similar to the StackHawk scan, add the interpret script into step 3 of the Workflow.

+ Add Step -> Shell Script

Name: Shell Script

Script Type: Bash

Execute on Delegate: checked

Delegate Selector: ec2

Script:

#Change Folder

cd ~/stackhawk-scans

#Parse Results

export TotalHighViolations=$(grep -i '\<Risk' scanresults.txt | grep -c -i 'High')

echo "Total High Violations: " $TotalHighViolations

#Deployment Logic

#Exit if High Violations Match

if [[ $TotalHighViolations -gt 0 ]] ; then

echo "High Violations Preventing Deployment"

exit 1

else

echo "Moving Forward with Deployment"

exit 0

fi

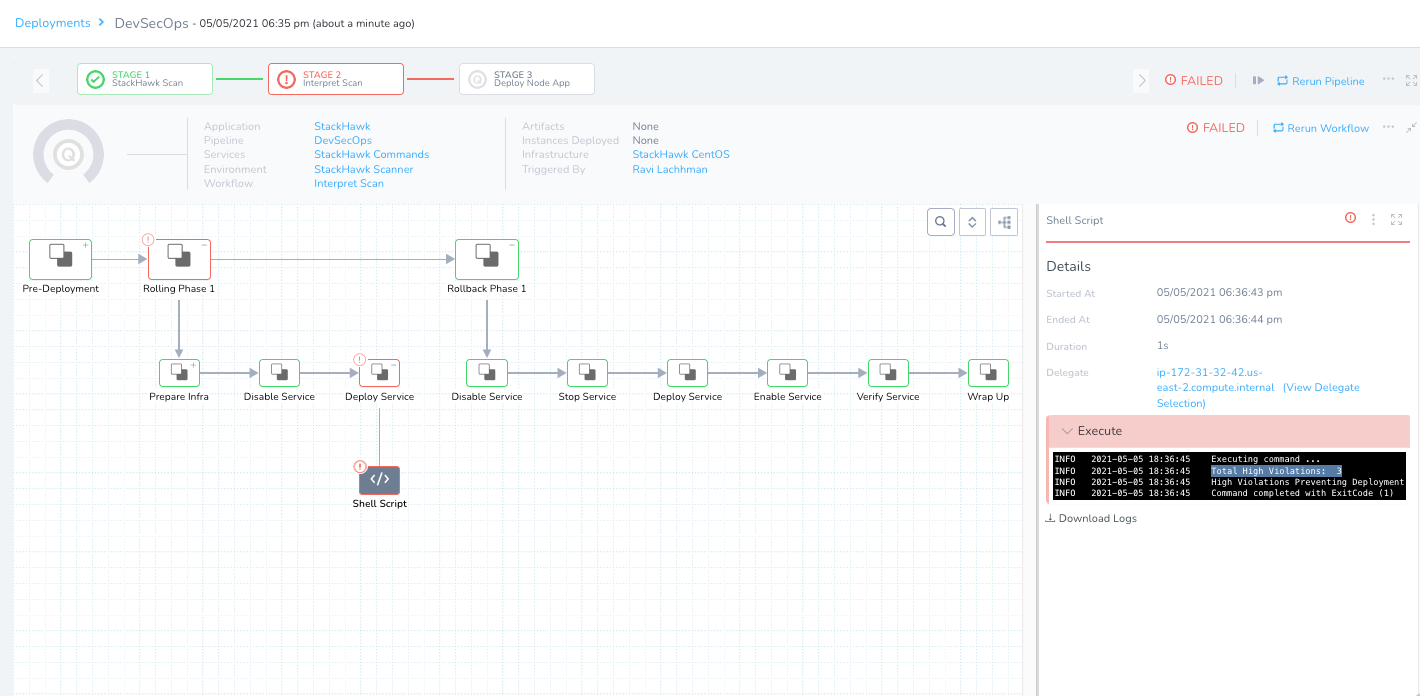

Click Submit and the interpretation is wired. The script will interpret the scan results from the StackHawk scan console output, and if there are High Violations, stop the deployment.
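If you want finer-grained gates than a single High check, the same grep-based idea can be generalized to per-level thresholds. The sketch below assumes, like the script above, that each finding in the console output has a line mentioning "Risk" together with its level; inspect your own scanresults.txt to confirm the format. The helper names count_risk and check_threshold and the threshold values are illustrative assumptions.

```shell
# Hypothetical helper: count result lines that mention "Risk" and a given
# level, using a matching approach similar to the script above.
# Arguments: <level> <results file>
count_risk() {
  grep -i 'Risk' "$2" | grep -c -i "$1"
}

# Hypothetical helper: succeed only when the count for a level is within
# the allowed maximum.
# Arguments: <level> <max allowed> <results file>
check_threshold() {
  found=$(count_risk "$1" "$3" || true)
  echo "$1 findings: $found (allowed: $2)"
  [ "$found" -le "$2" ]
}

# Example: block on any High finding, tolerate up to five Medium findings.
# check_threshold High 0 scanresults.txt && check_threshold Medium 5 scanresults.txt
```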

The last step would be to stitch the scan, interpretation, and deployment together.

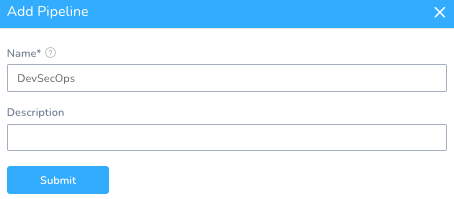

Operational DevSecOps Pipeline

The power of the Harness Pipeline is to stitch together granular steps to be a fully functional/operational DevSecOps pipeline.

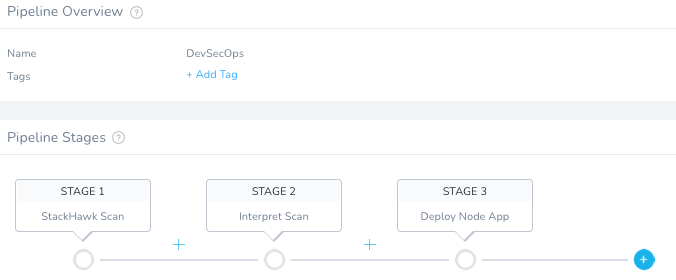

Creating a Harness Pipeline is simple. The order will be to have Pipeline Stages for the scan, then interpretation, then if hygienic, deployment.
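Conceptually, the gating behaves like a sequence of steps where the first failure stops everything after it. The following shell sketch is only an analogy for that ordering, not how Harness is implemented; the function names and the stand-in stages are hypothetical.

```shell
# Hypothetical sketch: run each named stage function in order and stop at
# the first one that fails, mirroring how a failed Stage halts the Pipeline.
run_stages() {
  for stage in "$@"; do
    echo "Running stage: $stage"
    if ! "$stage"; then
      echo "Stage failed: $stage"
      return 1
    fi
  done
  echo "All stages passed"
}

# Stand-in stage functions; in Harness these are the three Workflows.
scan()      { echo "scan executed"; }
interpret() { echo "high violations found"; return 1; }
deploy()    { echo "deployed"; }

# Example: stops after "interpret", so "deploy" never runs.
# run_stages scan interpret deploy
```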

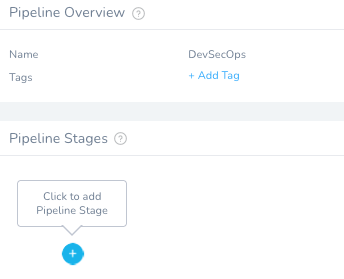

Setup -> StackHawk -> Pipelines + Add Pipeline

Name: DevSecOps

Once you hit Submit, you can add a Stage for every Workflow.

Click the + circle to add the first Stage, StackHawk Scan.

Click Submit, and add the other two: Interpret and Deploy.

Once done, your CD Abstraction Model is complete and filled out. You are ready to execute!

Running your DevSecOps Pipeline

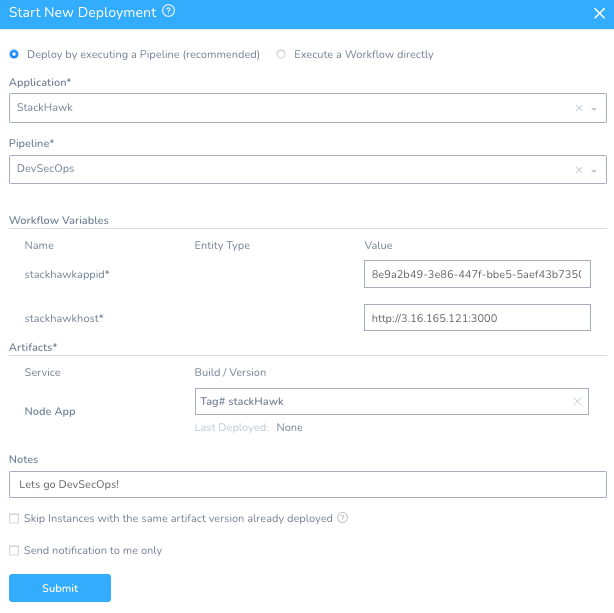

To run your example, head to the left-hand navigation and click Continuous Deployment, then Start New Deployment.

You will want to deploy the “StackHawk” application and fill in your StackHawk AppID and running container address.

Application: StackHawk

Pipeline: DevSecOps

stackhawkappid: your_stackhawk_app_id

stackhawkhost: your_running_host_or_ip_address

Artifact/Node App Tag: your_tag [or #stackHawk if using the example from rlachhman]

Click Submit – you are now off to the races! Note: the Pipeline will fail because this app is pretty vulnerable. You can see in the second Stage that the deployment has been blocked.

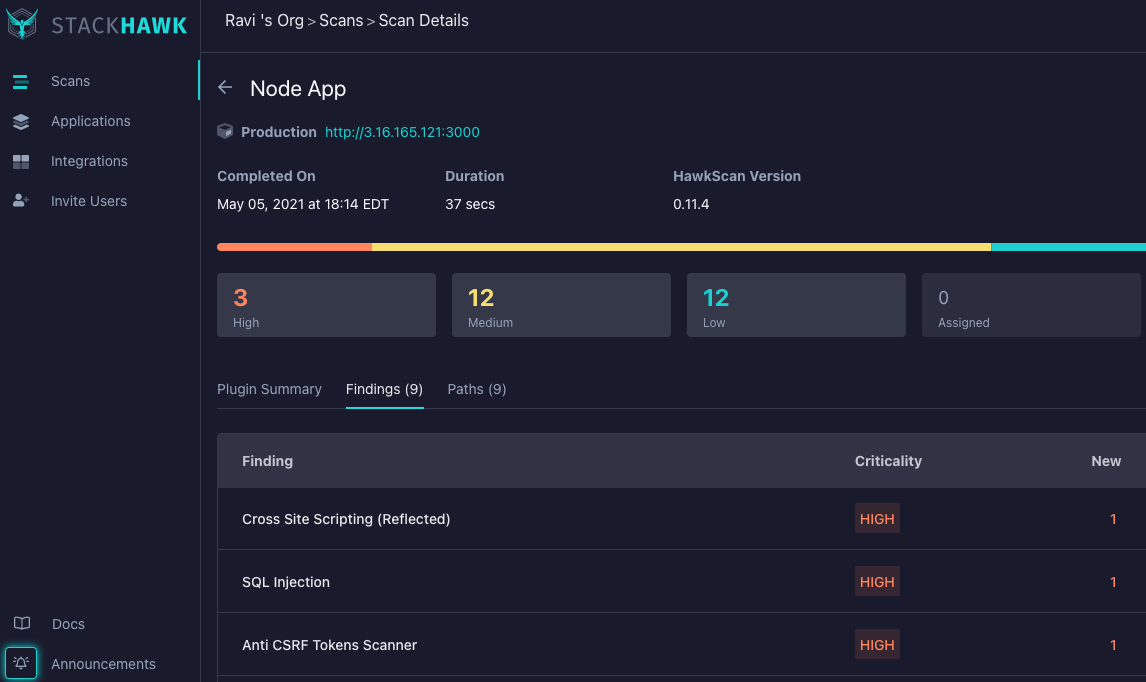

Head back to the StackHawk Console – you can now triage the vulnerabilities.

Happy DevSecOps-ing! With StackHawk, you can acknowledge the vulnerabilities and rerun your pipeline for a successful deployment.

DevSecOps and Harness: Better Together

Harness, as the premier software delivery platform, can help you realize your DevSecOps goals. As an unbiased way of integrating new technologies and scanning methodologies, the Harness Platform can be the conduit for paradigm shifts that happen in software delivery. Make sure to sign up for a Harness Account today!

Cheers,

Ravi

This post was written by Ravi Lachhman. Ravi is an evangelist at Harness. Prior to Harness, Ravi was an evangelist at AppDynamics. Ravi has held various sales and engineering roles at Mesosphere, Red Hat, and IBM helping commercial and federal clients build the next generation of distributed systems. Ravi enjoys traveling the world with his stomach and is obsessed with Korean BBQ.