Although this approach is relatively new and can be cumbersome to implement, from a security perspective we felt it was simply the right thing to do. The alternative is to skip cross-account roles and have a single account responsible for everything, which is faster to set up but significantly less secure.

To implement this properly, we had to split our accounts by infrastructure and deployment concerns, use Serverless Stacks on demand, and build a CI/CD pipeline around GitFlow actions.

Amazon recommends this as a best practice and has published several articles on the topic, including how to set up a cross-account pipeline and how to implement GitFlow using CodePipeline.

Those articles are informative and addressed most of our needs. However, we took a slightly different approach to get more separation between environments, which means a smaller blast radius, and more control over how code is promoted.

Developing Accounts

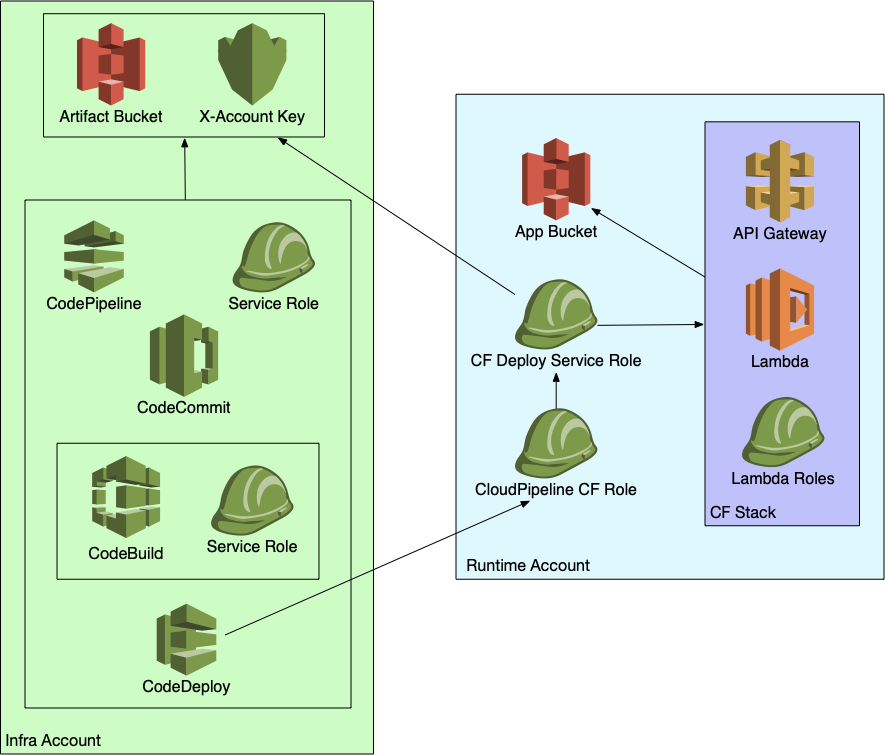

Rather than the standard DevAccount, ToolsAccount, TestAccount, and ProdAccount identities Amazon uses for cross-account pipelines, we centered our accounts around environments. Each environment has at least two accounts: an InfrastructureAccount and a RuntimeAccount.

InfrastructureAccount: The InfrastructureAccount combines the features of the DevAccount and ToolsAccount that Amazon recommends, and orchestrates continuous integration and deployment.

RuntimeAccount: The RuntimeAccount is similar to Amazon's TestAccount and ProdAccount; it is where the target stack actually runs.

CodePipeline creates and updates stack resources from the InfrastructureAccount, reaching into the RuntimeAccount to perform work as needed.
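In IAM terms, this hand-off usually comes down to a pair of policies. The sketch below assumes a deployment role in the RuntimeAccount that the pipeline in the InfrastructureAccount assumes, and builds both policy documents as Python dictionaries. The account IDs and the role name `PipelineDeployRole` are hypothetical placeholders, not values from our setup.

```python
import json

# Hypothetical account IDs and role name, for illustration only.
INFRA_ACCOUNT_ID = "111111111111"
RUNTIME_ACCOUNT_ID = "222222222222"
DEPLOY_ROLE_NAME = "PipelineDeployRole"


def runtime_trust_policy(infra_account_id: str) -> dict:
    """Trust policy for the role in the RuntimeAccount: it lets principals
    in the InfrastructureAccount call sts:AssumeRole on this role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": f"arn:aws:iam::{infra_account_id}:root"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


def infra_assume_policy(runtime_account_id: str, role_name: str) -> dict:
    """Identity policy for the pipeline's role in the InfrastructureAccount:
    it allows assuming exactly one role in the RuntimeAccount."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "sts:AssumeRole",
                "Resource": f"arn:aws:iam::{runtime_account_id}:role/{role_name}",
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(runtime_trust_policy(INFRA_ACCOUNT_ID), indent=2))
    print(json.dumps(infra_assume_policy(RUNTIME_ACCOUNT_ID, DEPLOY_ROLE_NAME), indent=2))
```

Trusting the InfrastructureAccount's root principal delegates the decision to that account, so the pipeline's own role still needs the `sts:AssumeRole` permission from the second policy. Both halves must be in place for the role assumption to succeed.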

We also use the open-source tool Terraform, rather than Amazon's CloudFormation, to provision our AWS resources. That said, we still use CloudFormation for some tasks, such as Lambda deployment.

Cross-Account S3 Access

Granting permissions for cross-account access between moving parts like these can be tricky. Our build artifacts need cross-account S3 access, which requires a customer managed KMS key shared between the accounts, because the default AWS managed key for S3 cannot be shared across accounts. Pay close attention to the step where CodePipeline, running in the InfrastructureAccount, creates and updates stack resources in the RuntimeAccount: that is where the cross-account permissions have to line up.
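As a rough sketch of the S3 and KMS side, the following builds a key-policy statement and an artifact-bucket policy that let the RuntimeAccount read and write encrypted artifacts. The bucket name, the account ID, and the exact action lists are illustrative assumptions; AWS's cross-account CodePipeline documentation is the authority on the minimum set.

```python
# Hypothetical values, for illustration only.
RUNTIME_ACCOUNT_ID = "222222222222"
ARTIFACT_BUCKET = "example-pipeline-artifacts"


def kms_key_statement(runtime_account_id: str) -> dict:
    """Statement to add to the customer managed key's policy so the
    RuntimeAccount can encrypt and decrypt pipeline artifacts."""
    return {
        "Sid": "AllowRuntimeAccountUseOfKey",
        "Effect": "Allow",
        "Principal": {"AWS": f"arn:aws:iam::{runtime_account_id}:root"},
        "Action": [
            "kms:Encrypt",
            "kms:Decrypt",
            "kms:ReEncrypt*",
            "kms:GenerateDataKey*",
            "kms:DescribeKey",
        ],
        # Inside a key policy, "*" means the key the policy is attached to.
        "Resource": "*",
    }


def artifact_bucket_policy(bucket: str, runtime_account_id: str) -> dict:
    """Bucket policy granting the RuntimeAccount object access and listing."""
    principal = {"AWS": f"arn:aws:iam::{runtime_account_id}:root"}
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowRuntimeAccountObjectAccess",
                "Effect": "Allow",
                "Principal": principal,
                "Action": ["s3:GetObject", "s3:GetObjectVersion", "s3:PutObject"],
                "Resource": f"arn:aws:s3:::{bucket}/*",
            },
            {
                "Sid": "AllowRuntimeAccountList",
                "Effect": "Allow",
                "Principal": principal,
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",
            },
        ],
    }
```

Both grants are needed: bucket access alone fails because the artifacts are encrypted with the KMS key, and key access alone grants nothing in S3. The role in the RuntimeAccount also needs matching permissions in its own IAM policy, since cross-account access has to be allowed on both sides.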

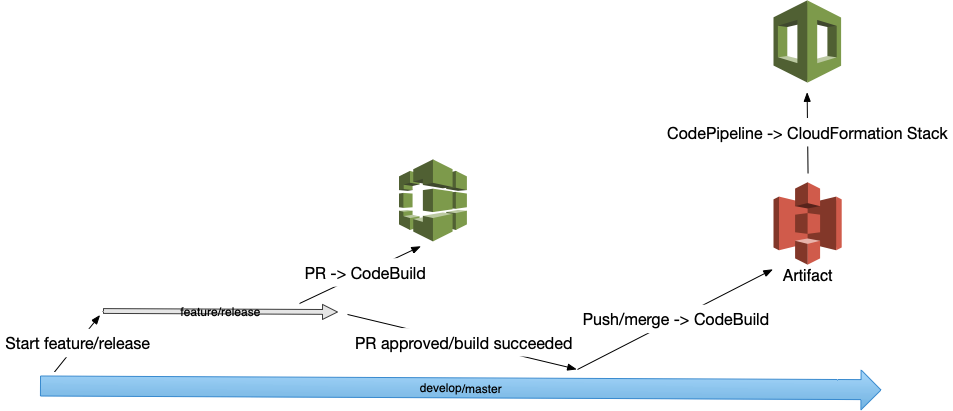

Amazon recommends permanent and temporary stacks that map to GitFlow branches, which fit nicely with our concept of environments. We deploy the develop branch to our Preprod environment and the master branch to our Prod environment.

We also wanted every PR targeting develop or master to be built and tested automatically, with deployment happening only when a push or merge lands on the branch. Branch protection rules in GitHub require at least one other person to approve each PR and require those checks to pass before a merge can complete.
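The promotion rules above reduce to a small decision table. The hypothetical `plan` function below is only a sketch of that logic, not code from our pipeline: pull requests into develop or master are built and tested, while pushes and merges to those branches are also deployed to the matching environment.

```python
from typing import Optional, Tuple

# Branch-to-environment mapping described above.
BRANCH_ENVIRONMENTS = {"develop": "Preprod", "master": "Prod"}


def plan(event: str, branch: str) -> Tuple[bool, Optional[str]]:
    """Return (build_and_test, environment_to_deploy_or_None).

    event:  "pull_request" or "push" (a merged PR arrives as a push).
    branch: for a PR, its target branch; for a push, the branch pushed to.
    """
    env = BRANCH_ENVIRONMENTS.get(branch)
    if env is None:
        # Not a pipeline branch; these pipelines ignore it.
        return (False, None)
    if event == "pull_request":
        return (True, None)  # build and test, never deploy
    if event == "push":
        return (True, env)   # build, test, then deploy
    raise ValueError(f"unknown event type: {event}")


if __name__ == "__main__":
    for evt, br in [("pull_request", "develop"), ("push", "develop"), ("push", "master")]:
        print(evt, br, plan(evt, br))
```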

One issue we ran into with CodePipeline is branch specificity: CodePipeline's GitHub webhooks cannot filter events as precisely as CodeBuild's. That makes it difficult to distinguish pull requests from pushes and merges, so we created a separate CodeBuild project for each event type, each with its own webhook. The push/merge build writes its artifact to S3, and that write is what triggers the CodePipeline.
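CodeBuild expresses this kind of branch specificity through webhook filter groups. The sketch below builds filter groups in the shape CodeBuild's `CreateWebhook` API expects (a list of lists of `type`/`pattern` filters): one group for pull requests targeting a branch, matched on `BASE_REF`, and one for pushes to that branch, matched on `HEAD_REF`. The helper names are hypothetical, and the particular set of PR events is an assumption.

```python
from typing import Dict, List

WebhookFilter = Dict[str, str]

# PR activity that should trigger a build-and-test run (our assumption).
PR_EVENTS = "PULL_REQUEST_CREATED, PULL_REQUEST_UPDATED, PULL_REQUEST_REOPENED"


def branch_ref(branch: str) -> str:
    # Anchored so "develop" does not also match "develop-old".
    # Assumes the branch name contains no regex metacharacters.
    return f"^refs/heads/{branch}$"


def pr_filter_groups(branch: str) -> List[List[WebhookFilter]]:
    """Fire on PR activity whose target (base) branch is `branch`."""
    return [[
        {"type": "EVENT", "pattern": PR_EVENTS},
        {"type": "BASE_REF", "pattern": branch_ref(branch)},
    ]]


def push_filter_groups(branch: str) -> List[List[WebhookFilter]]:
    """Fire only on pushes (including merged PRs) to `branch`."""
    return [[
        {"type": "EVENT", "pattern": "PUSH"},
        {"type": "HEAD_REF", "pattern": branch_ref(branch)},
    ]]


if __name__ == "__main__":
    print(pr_filter_groups("develop"))
    print(push_filter_groups("develop"))
```

With boto3, a group list could then be passed as, for example, `codebuild.create_webhook(projectName=..., filterGroups=pr_filter_groups("develop"))`, where the project name is whatever the CodeBuild project is called. Filters inside one group are combined with AND and separate groups with OR, so each webhook fires only when both the event type and the branch match.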

Closing Thoughts

Along with dedicated stacks for the Preprod and Prod environments, we can also spin up stacks on demand for purposes such as dev testing and integration testing. Perhaps the most fun part was deleting everything and watching the pipeline rebuild it all!

Our mix of process and technology allows us to keep Preprod and Prod environments isolated via branches while bootstrapping and managing environments independently and safely.

By splitting each environment into Infrastructure and Runtime accounts and integrating them with GitFlow in our CI/CD pipeline, we have a more isolated and flexible mechanism for getting features to our users!